This post discusses the development of the Helen platform, which helps users overcome barriers to achieving academic and career goals, as part of Yunzhi’s MSc project. It highlights key findings from participatory design sessions with British and Arab users, revealing shared preferences and cultural differences in web interaction.

Background:

Global Share is a community initiative to build a social enterprise, motivated by the disparities in software development and access to online information between English-speaking and non-English-speaking regions (Graham et al., 2014; Jagne & Smith-Atakan, 2006). It also draws inspiration from the experiences of Helen Keller. The enterprise is developing the Helen platform to help users hindered by language and regional barriers achieve their academic and career goals. The platform will provide various services, including high-quality resources from professionals and a social network of like-minded individuals. Currently, the platform targets users in the UK and Arab regions, but it is in the early stages of development and faces cross-cultural challenges.

Through interviews with stakeholders, we gained insight into Helen’s current development status. Drawing on recent research on cross-cultural participatory design, we selected methods to address the cross-cultural challenges the platform faces. We then conducted several cross-cultural participatory design experiments, which offered insights into how British and Arab cultures differ regarding web interaction and participatory design processes.

Methods:

Participatory design aims to reduce the power imbalance between designers and users by giving users a platform to voice their opinions (Merritt & Stolterman, 2012). However, as this approach has gained popularity, its original intent of balancing power has been diluted, especially in cross-cultural contexts (Mainsah & Morrison, 2014). Research also shows that cultural differences can influence preferences in web and interaction design (Alsswey & Al-Samarraie, 2021; Cyr & Trevor-Smith, 2004).

For the Helen platform, which caters to a multicultural audience, it’s crucial to use suitable participatory design methods while factoring in cultural differences in design preferences.

Our methodology is based on the framework of Hagen et al. (2012), which involves engaging end users in the design process and therefore suits the target users of the Helen platform.

The process involved three key stages:

- Stakeholder Interviews – To understand the current development of Helen.

- Participatory Design Workshops – Conducted separately for British and Arab users.

- Cross-Cultural Design Experiments – To explore how cultural differences impact design preferences.

To ensure an equitable, participatory design process, we considered cross-cultural factors such as language diversity (Cardinal et al., 2020), design preferences (Alsswey & Al-Samarraie, 2021), and scenario-driven design (Okamoto et al., 2007). We also followed the Design for Care principles (Rossitto et al., 2021) and incorporated four critical participatory design principles and techniques:

- Speak with Comfort – Ensuring participants feel comfortable voicing their opinions.

  - Creating a supportive environment where participants feel safe and respected is essential for fostering open dialogue and ensuring that all voices are heard, regardless of cultural or language differences.

- Reverse Brainstorming – Encouraging participants to solve problems by exploring what could go wrong.

  - This technique challenges participants to think of potential obstacles or adverse outcomes, allowing for creative problem-solving by addressing issues from an opposing perspective and turning them into actionable insights.

- Visual Aids – Using visual aids to facilitate clearer communication.

  - Visual tools, such as diagrams, sketches, or prototypes, help bridge language gaps and enable participants to express their ideas more clearly, making complex concepts easier to understand across cultures.

- Think for the Community – Fostering a collaborative mindset.

  - Encouraging participants to focus on collective goals rather than individual interests helps create solutions that benefit the wider community, promoting a spirit of teamwork and shared ownership of the design process.

Miro was used as the platform to conduct these participatory design processes.

Results:

The participatory design sessions produced some unexpected findings. Contrary to the differences anticipated from previous research, users from the UK and Arab regions shared similar design preferences in many areas, including:

- A preference for visual information.

- A strong desire for community interaction.

- Goal sharing and a preference for reward systems.

However, there were distinct cultural differences in specific design elements:

- Web Design: UK users preferred higher information density, while Arab users favoured a cleaner, less crowded interface.

- Interaction Design: British users preferred clear goal-setting guidance, whereas Arab users valued more flexible goal incentives.

Additionally, cross-cultural differences were observed in the participatory design sessions themselves. British users expressed their opinions more independently and were less likely to adjust their views in response to others. In contrast, Arab users tended to adapt their opinions based on group dynamics and showed a greater enthusiasm for open discussions.

Discussion:

The findings indicate that Helen may not require two entirely distinct design versions for UK and Arab users, as there are many shared preferences. However, variations in interaction design suggest that some culturally tailored features may enhance the user experience for different regions.

The participatory design sessions also highlighted different collaboration habits between the two cultures, underscoring the need for a thoughtful, care-focused approach in cross-cultural design. Ensuring that all participants feel comfortable and empowered to express their ideas is critical to developing software that meets the diverse needs of a global audience. Future research should continue to explore how cross-cultural participatory design can be refined to further address these differences. It is also important to note that these results are constrained by the three-month timeframe of the MSc project and the relatively small amount of data collected, so they may not fully capture the diversity of preferences within the broader populations.

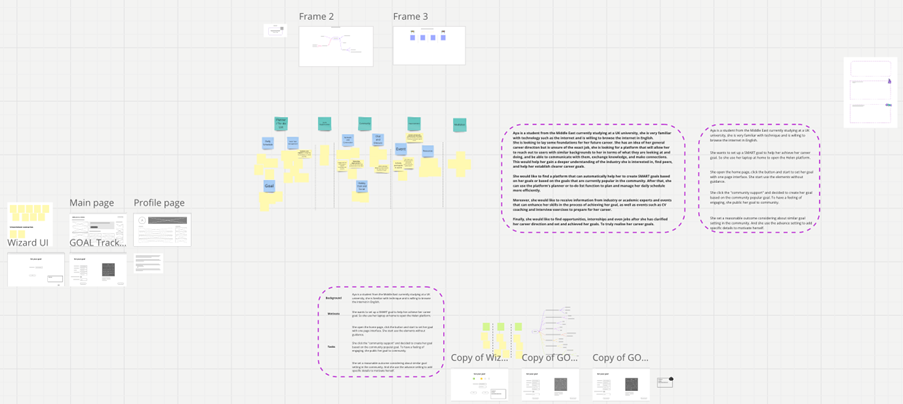

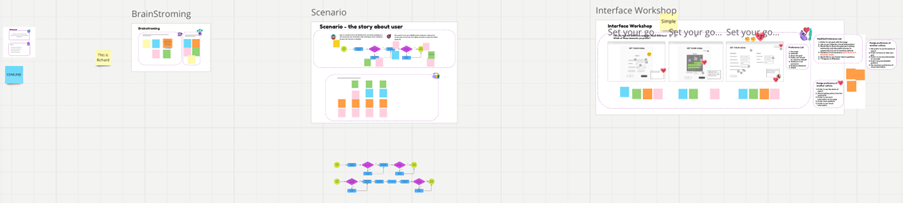

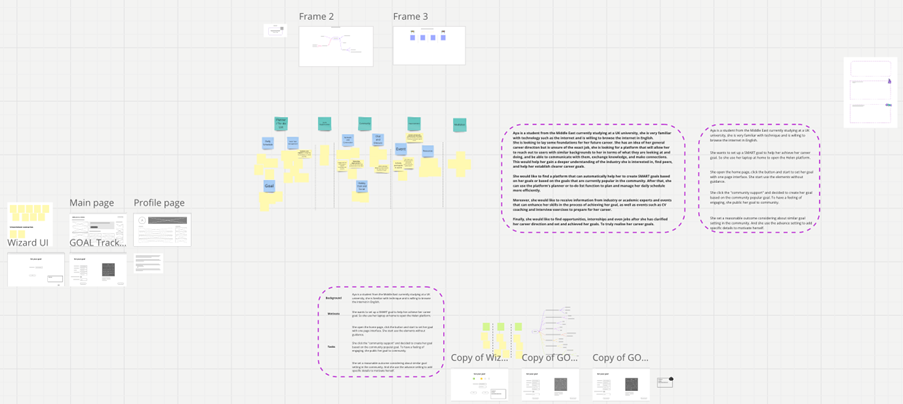

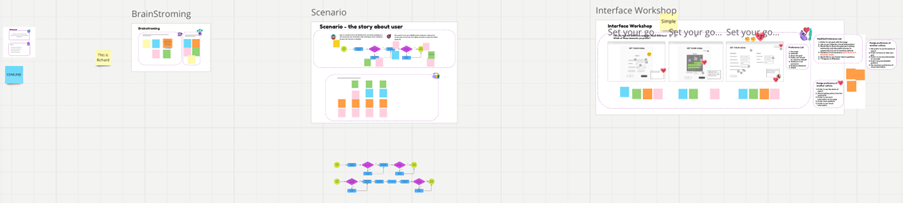

Screenshots:

- Board S: Stakeholder Interviews

- Board A: Arabic Participatory Design Session

- Board B: UK Participatory Design Session

Conclusion:

This project provided insights into cross-cultural design preferences and collaboration habits between British and Arab users, contributing to the development of Helen. While the findings suggest more similarities than differences in design preferences, specific cultural nuances in interaction design warrant further attention. By using participatory design methods, the project identified factors for improving cross-cultural collaboration in design and opened up interesting possibilities for future research and development.

As a collaborative project with Global Share, this research offers practical guidance for designing platforms like Helen that cater to diverse cultural audiences.

About the Researcher:

Yunzhi Xu holds a Master’s degree in Human-Computer Interaction (HCI) from the University of St Andrews and a Bachelor’s in Computer Science and Technology. He currently works as a Product Manager in the fintech industry, focusing on user experience and interaction design for financial software. His interests lie in user-centered design and participatory design, with plans to explore HCI practices further within fintech. Yunzhi is also considering pursuing a PhD in the future.

The Researcher’s Reflection on the Project:

As an individual with an Asian background, participating in a project focused on British and Arab cultures presented unique challenges, especially in communication. However, I soon discovered that cultural differences often fade in the context of open and respectful dialogue, which led to productive stakeholder meetings.

One challenge I faced was scheduling design sessions with participants across multiple time zones. To address this, I used online polling to coordinate availability, which made it easier to respect everyone’s time and manage sessions effectively.

Reflection on Support:

I am immensely grateful for the guidance provided by Dr Miguel and Dr Ardati. Their weekly meetings, filled with insightful conversations and new research articles, helped me gain fresh perspectives on my project. Their support was invaluable in navigating the challenges I encountered and contributed to my growth as both a researcher and a professional.

Contact Information:

Dr Ardati’s Reflection:

I am proud of the work done on this project, which tackles an important and often overlooked issue in participatory design: bridging cultural differences in a meaningful way. The research highlights the complexity and value of designing platforms for users from diverse backgrounds, offering important insights into how British and Arab cultures interact with digital interfaces. I was particularly impressed by the student’s application of cross-cultural participatory design methods, which revealed both unexpected similarities and nuanced differences. Throughout the project, Yunzhi demonstrated exceptional diligence, consistently delivering high-quality work and showing a clear commitment to understanding the impact of cultural dynamics on design. Their performance reflects not only strong academic rigor but also a deep curiosity and respect for the diverse perspectives of the stakeholders involved.

Additional References:

- Alsswey, A., & Al-Samarraie, H. (2021). The role of Hofstede’s cultural dimensions in the design of user interface: The case of Arabic. AI EDAM, 35(1), 116–127. https://doi.org/10.1017/S0890060421000019

- Cardinal, A., Gonzales, L., & Rose, E. J. (2020). Language as Participation: Multilingual User Experience Design. Proceedings of the 38th ACM International Conference on Design of Communication, 1–7. https://doi.org/10.1145/3380851.3416763

- Cyr, D., & Trevor-Smith, H. (2004). Localization of Web design: An empirical comparison of German, Japanese, and United States Web site characteristics. Journal of the American Society for Information Science and Technology, 55(13), 1199–1208. https://doi.org/10.1002/asi.20075

- Graham, M., Hogan, B., Straumann, R. K., & Medhat, A. (2014). Uneven Geographies of User-Generated Information: Patterns of Increasing Informational Poverty. Annals of the Association of American Geographers, 104(4), 746–764. https://doi.org/10.1080/00045608.2014.910087

- Hagen, P., Collin, P., Metcalf, A., Nicholas, M., Rahilly, K., & Swainston, N. (2012). Participatory design of evidence-based online youth mental health promotion, intervention and treatment. https://researchdirect.westernsydney.edu.au/islandora/object/uws%3A18814/

- Jagne, J., & Smith-Atakan, A. S. G. (2006). Cross-cultural interface design strategy. Universal Access in the Information Society, 5(3), 299–305. https://doi.org/10.1007/s10209-006-0048-6

- Mainsah, H., & Morrison, A. (2014). Participatory design through a cultural lens: Insights from postcolonial theory. Proceedings of the 13th Participatory Design Conference: Short Papers, Industry Cases, Workshop Descriptions, Doctoral Consortium Papers, and Keynote Abstracts – Volume 2, 83–86. https://doi.org/10.1145/2662155.2662195

- Merritt, S., & Stolterman, E. (2012). Cultural hybridity in participatory design. Proceedings of the 12th Participatory Design Conference: Exploratory Papers, Workshop Descriptions, Industry Cases – Volume 2, 73–76. https://doi.org/10.1145/2348144.2348168

- Mushtaha, A., & De Troyer, O. (2007). Cross-Cultural Understanding of Content and Interface in the Context of E-Learning Systems. In N. Aykin (Ed.), Usability and Internationalization. HCI and Culture (pp. 164–173). Springer. https://doi.org/10.1007/978-3-540-73287-7_21

- Okamoto, M., Komatsu, H., Gyobu, I., & Ito, K. (2007). Participatory Design Using Scenarios in Different Cultures. Human-Computer Interaction. Interaction Design and Usability, 223–231. https://doi.org/10.1007/978-3-540-73105-4_25

- Rossitto, C., Korsgaard, H., Lampinen, A., & Bødker, S. (2021). Efficiency and Care in Community-led Initiatives. Proceedings of the ACM on Human-Computer Interaction, 5(CSCW2), 467:1–467:27. https://doi.org/10.1145/3479611