Congratulations to Hui-Shyong Yeo, who has been selected both as an ACM SIGCHI communication ambassador and as a SIGCHI representative at the ACM 50 Years of the A.M. Turing Award Celebration.

Yeo is a second-year PhD student who is particularly interested in exploring and developing novel interaction techniques. Since joining us in SACHI, he has had work accepted at ACM CHI 2016 and 2017, ACM MobileHCI 2016 and 2017, and ACM UIST 2016. His work has featured at Google I/O 2016 and locally on STV news, and he gave a talk about his research at Google UK in 2016. His work has also featured in the media, including Gizmodo, The Verge, Engadget and TechCrunch; see his personal website for more details.

News

When: Tuesday 11th April 2017

Time: 14:00 – 15:00

Where: Cole 1.33a

Title: Co-Designed, Collocated & Playful Mobile Interactions

Abstract: Mobile devices such as smartphones and tablets were originally conceived and have traditionally been utilized for individual use. Research on mobile collocated interactions has explored situations in which collocated users engage in collaborative activities using their mobile devices, thus going from personal/individual toward shared/multiuser experiences and interactions.

When: Monday 3rd April 2017, 14:00 – 15:00

Where: Jack Cole 1.33a

Title: Social interaction characteristics for socially acceptable robots

Abstract: The last decade has seen rapid advances in social robotic technology. Social robots are beginning to be used successfully as robot companions and as therapeutic aids. In both cases, the robots need to be able to interact intuitively and comfortably with their human users in close physical proximity.

Uta Hinrichs, Trevor Hogan, Eva Hornecker

Overview

Information visualization has become a popular tool to facilitate sense-making, discovery and communication in a large range of professional and casual contexts. However, evaluating visualizations is still a challenge. In particular, we lack techniques to help understand how visualizations are experienced by people. In this paper we discuss the potential of the Elicitation Interview technique to be applied in the context of visualization. The Elicitation Interview is a method for gathering detailed and precise accounts of human experience. We argue that it can be applied to help understand how people experience and interpret visualizations as part of exploration and data analysis processes. We describe the key characteristics of this interview technique and present a study we conducted to exemplify how it can be applied to evaluate data representations. Our study illustrates the types of insights this technique can bring to the fore, for example, evidence for deep interpretation of visual representations and the formation of interpretations and stories beyond the represented data. We discuss general visualization evaluation scenarios where the Elicitation Interview technique may be beneficial and specify what needs to be considered when applying this technique in a visualization context specifically.

Publications

Trevor Hogan, Uta Hinrichs, Eva Hornecker. The Elicitation Interview Technique: Capturing People’s Experiences of Data Representations. IEEE Transactions on Visualization and Computer Graphics, 2016.

Last year we were awarded a Microsoft Surface Hub and funding by Microsoft Research and Microsoft, based on our Academic Research Request Proposal for the “Intelligent Canvas for Data Analysis and Exploration”. We are pleased to announce our Surface Hub Crucible program for the summer of 2017 here in SACHI at the University of St Andrews.

Title: An argumentation-based approach to facilitate and improve human reasoning

Abstract: The ability to understand and reason about different alternatives for a decision is fundamental to making informed choices. Intelligent autonomous systems have the potential to improve the quality of human decision-making, but their use may be hampered by the difficulty people have in interacting with them and trusting their outputs.

Title: The design of digital technologies to support transitional events in the human lifespan

Abstract: This talk will focus on (i) qualitative research undertaken to understand how digital technologies are being used during transitional periods across the human lifespan, such as becoming an adult, romantic breakup, and end of life, and (ii) the opportunities for technology design that have emerged as a result. Areas of focus include presentation of self online, group social norms, and the problematic nature of ‘ownership’ of digital materials.

Title: The Collaborative Design of Tangible Interactions in Museums

Abstract: Interactive technology for cultural heritage has long been a subject of study for Human-Computer Interaction. Findings from a number of studies suggest, however, that technology can sometimes distance visitors from heritage holdings rather than enabling people to establish deeper connections to what they see. Furthermore, the introduction of innovative interactive installations in museums is often seen as an interesting novelty but seldom leads to substantive change in how a museum approaches visitor engagement. This talk will discuss work on the EU project “meSch” (Material EncounterS with Digital Cultural Heritage), aimed at creating a do-it-yourself platform for cultural heritage professionals to design interactive tangible computing installations that bridge the gap between digital content and the materiality of museum objects and exhibits. The project has adopted a collaborative design approach throughout, involving cultural heritage professionals, designers, developers and social scientists. The talk will feature key examples of how collaboration unfolded and relevant lessons learned, particularly regarding the shared envisioning of tangible interaction concepts at a variety of heritage sites including archaeology and art museums, hands-on exploration centres and outdoor historical sites.

Biography: Dr. Luigina Ciolfi is Reader in Communication at Sheffield Hallam University. She holds a Laurea (Univ. of Siena, Italy) and a PhD (Univ. of Limerick, Ireland) in Human-Computer Interaction. Her research focuses on understanding and designing for human situated practices mediated by technology in both work and leisure settings, with a particular focus on participation and collaboration in design. She has worked on numerous international research projects on heritage technologies, nomadic work and interaction in public spaces. She is the author of over 80 peer-reviewed publications, has been an invited speaker in ten countries, and has advised on research policy around digital technologies and cultural heritage for several European countries. Dr. Ciolfi serves on a number of scientific committees for international conferences and journals, including ACM CHI, ACM CSCW, ACM GROUP, ECSCW, COOP and the CSCW Journal. She is a member of the EUSSET (The European Society for Socially Embedded Technologies) and ACM CSCW Steering Groups. Dr. Ciolfi is a senior member of the ACM. Full information on her work can be found at http://luiginaciolfi.com

A display of life-saving medical technology by University of St Andrews researchers stole the show at the annual Universities Scotland reception for MSPs, held in the Garden Lobby of the Scottish Parliament at Holyrood last week.

Dr David Harris-Birtill, founder of Beyond Medics, and David Morrison had a steady stream of politicians eager to try out a working prototype of their ground-breaking Automated Remote Pulse Oximetry system, which automatically displays an individual’s vital signs (heart rate and blood oxygenation level) through a remote camera, without the need for clips and wires.

Dr David Harris-Birtill (left) and David Morrison (centre) with Edinburgh South MSP Daniel Johnson (right), a St Andrews alumnus.

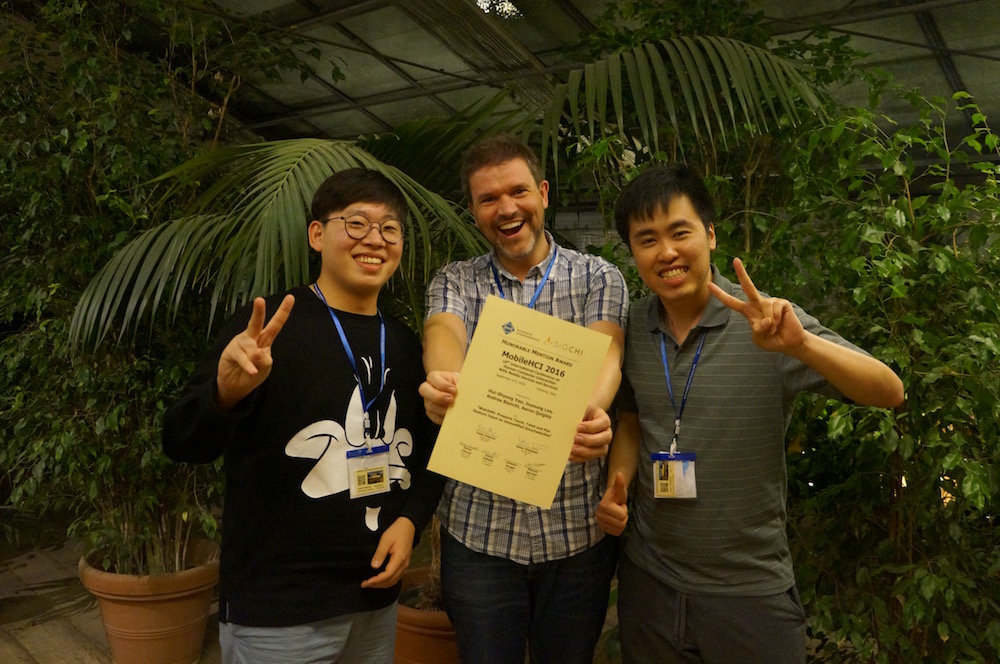

Congratulations to Hui-Shyong Yeo, Aaron Quigley and colleagues, who won a Best Paper Honorable Mention award for the paper WatchMI at MobileHCI 2016.

Yeo also attended the Doctoral Consortium and demoed WatchMI during the demo session.

The paper “WatchMI: pressure touch, twist and pan gesture input on unmodified smartwatches”, which appears in the Proceedings of the 18th International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI’16), can be accessed via:

- The ACM Digital Library page for WatchMI

- The ACM SIGCHI OpenTOC page for MobileHCI 2016 (search for WatchMI), free until Sep 2017

The Doctoral Consortium and demo papers can be accessed via:

- The ACM Digital Library page for Single-handed interaction for mobile and wearable computing

- The ACM Digital Library page for WatchMI’s applications

- The ACM SIGCHI OpenTOC page for MobileHCI 2016 (search for WatchMI), free until Sep 2017