Speaker: Aaron Quigley and Daniel Rough, University of St Andrews

Date/Time: 12–1pm, May 20, 2014

Location: Jack Cole 1.33a

Title: AwToolkit: Attention-Aware User Interface Widgets

Authors: Juan-Enrique Garrido, Victor M. R. Penichet, Maria-Dolores Lozano, Aaron Quigley, Per Ola Kristensson.

Abstract: Increasing screen real estate allows for the development of applications where a single user can manage a large amount of data and related tasks through a distributed user interface. However, such users can easily become overloaded and unaware of display changes as they alternate their attention between different displays. We propose AwToolkit, a novel widget set for developers that supports users in maintaining awareness in multi-display systems. The AwToolkit widgets automatically determine which display a user is looking at and provide notifications with different levels of subtlety to make the user aware of any unattended display changes. The toolkit uses four notification levels (unnoticeable, subtle, intrusive and disruptive), ranging from an almost imperceptible visual change to a clear and visually salient change. We describe AwToolkit's six widgets, which have been designed for C# developers, and the design of a user study with an application oriented towards healthcare environments. The evaluation results reveal a marked increase in user awareness in comparison to the same application implemented without AwToolkit.

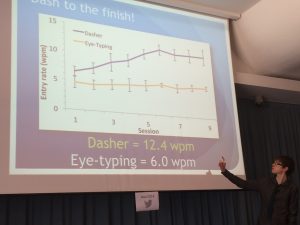

Title: An Evaluation of Dasher with a High-Performance Language Model as a Gaze Communication Method

Authors: Daniel Rough, Keith Vertanen, Per Ola Kristensson

Abstract: Dasher is a promising, fast assistive gaze communication method. However, previous evaluations of Dasher have been inconclusive: the studies have been too short, involved too few participants, suffered from sampling bias, lacked a control condition, used an inappropriate language model, or some combination of these. To rectify this, we report results from two new evaluations of Dasher carried out using a Tobii P10 assistive eye-tracker machine. We also present a method of modifying Dasher so that it can use a state-of-the-art long-span statistical language model. Our experimental results show that, compared to a baseline eye-typing method, Dasher resulted in significantly faster entry rates (12.6 wpm versus 6.0 wpm in Experiment 1, and 14.2 wpm versus 7.0 wpm in Experiment 2). These faster entry rates were possible while maintaining error rates comparable to the baseline eye-typing method. Participants' perceived physical demand, mental demand, effort and frustration were all significantly lower for Dasher. Finally, participants rated Dasher as significantly more likeable, requiring less concentration and being more fun.

This seminar is part of our ongoing series from researchers in HCI. See here for our current schedule.

News

Earlier this year we held the SACHI Logo Contest. To decide on the outcome we formed a jury of 10 people, consisting of 5 academic and 5 student members of SACHI. We are pleased to announce that the best student design for our logo contest was submitted by Jason T. Jacques; you can see it to the left. Both the academic and student members of the jury selected Jason's logo as the best student design.

The logo submitted by Uta Hinrichs, while not eligible for the student prize, was the top-ranked entry and has been chosen as the logo to represent SACHI going forward. Congratulations to both Jason and Uta! You can see Uta's logo options below, which we will be adding to our website once it is redesigned in the months ahead. We also apologise to both Uta and Jason for reducing their submissions to these blog-worthy thumbnails, which do neither of them justice.

Members of SACHI are presenting a number of papers and other works at this year's AVI 2014, the International Working Conference on Advanced Visual Interfaces, held May 27–30, 2014 in Como, Italy.

“Started in Rome in 1992, AVI has become a biannual appointment for a wide international community of experts with a broad range of backgrounds. Through more than two decades, the Conference has contributed to the progress of Human-Computer Interaction, offering a forum to present and disseminate new technological results, new paradigms and new visions for interaction and interfaces. AVI 2014 offers a strong scientific program that provides an articulated picture of the most challenging and innovative directions in interface design, technology, and applications. World leading researchers from industry and academia will present their work. 25 different countries are represented in 3 workshops, 32 long papers, 17 short papers, 29 posters, and 14 hands-on demos.”

The schedule below will allow you to see a sample of the Human-Computer Interaction research at the University of St Andrews.

- Wednesday, 14:00 AVI Posters and Demos

"An End-User Interface for Behaviour Change Intervention Development"

D. Rough and A. Quigley (Univ. of St Andrews, UK)

- Thursday May 29, 2014, 8:45–10:30 Paper Session, Room A.2.1: Connection and Collaboration

"Paper vs. Tablets: The Effect of Document Media in Co-located Collaborative Work" [PDF] (Full Paper)

J. Haber, S. Carpendale (Univ. of Calgary, Canada); M. Nacenta (Univ. of St Andrews, UK)

- Thursday May 29, 2014, 11:00–12:40 Paper Session, Room A.3: Evaluation Studies

"An Evaluation of Dasher with a High-Performance Language Model as a Gaze Communication Method" [PDF] (Full Paper)

D. Rough, P. O. Kristensson (Univ. of St Andrews, UK); K. Vertanen (Montana Tech, USA)

- Thursday May 29, 2014, 15:15–16:30 AVI 2014 Best Papers, presented at Villa Erba, Lake Como

"AwToolkit: Attention-Aware User Interface Widgets" [PDF] (Full Paper)

J. E. Garrido Navarro, V. M. R. Penichet, M. D. Lozano (Univ. de Castilla-La Mancha, Spain); A. Quigley, P. O. Kristensson (Univ. of St Andrews, UK) (Best Paper)

Finally, on Friday May 30th at 9am, Aaron will chair the Interface Metaphors + Social Interaction session.