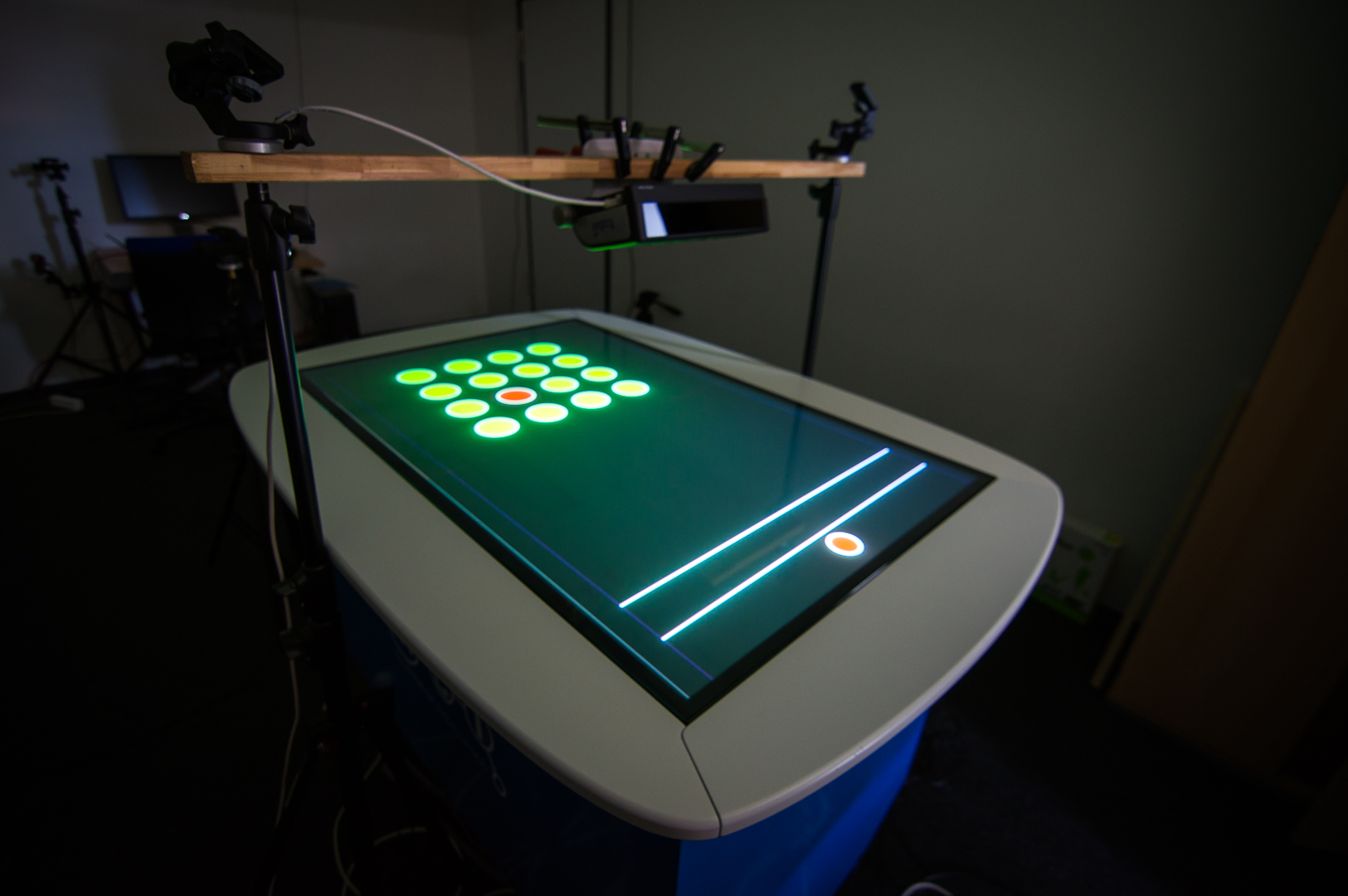

Speaker: Jakub Dostal, SACHI, School of Computer Science, University of St Andrews

Date/Time: 1-2pm March 5, 2013

Location: 1.33a Jack Cole, University of St Andrews

Abstract:

Modern computer workstation setups regularly include multiple displays in various configurations. With such multi-monitor or multi-display setups we have reached a stage where we have more display real-estate available than we are able to comfortably attend to. This talk will present the results of an exploration of techniques for visualising display changes in multi-display environments. Apart from four subtle gaze-dependent techniques for visualising change on unattended displays, it will cover the technology used to enable quick and cost-effective deployment to workstations. An evaluation of both the technology and the techniques themselves will also be presented. The talk will conclude with a brief discussion on the challenges in evaluating subtle interaction techniques.

About Jakub:

Jakub’s bio on the SACHI website.

News

CHI is the premier international conference on human computer interaction, and this year’s event is looking to be the most exciting yet for the St Andrews Computer Human Interaction (SACHI) research group.

Contributions to this year’s event come from several group members, and SACHI will be well represented with seven researchers in attendance: Professor Aaron Quigley, Dr Per Ola Kristensson, Dr Miguel Nacenta, Jakub Dostal, Michael Mauderer, Shyam Reyal, and Jason T. Jacques.

SACHI group members co-authored three full papers this year, on topics as broad as gesture-based interaction, tactile feedback, and text entry.

Papers

- Memorability of Pre-designed and User-defined Gesture Sets

Miguel A. Nacenta, Yemliha Kamber, Yizhou Qiang, and Per Ola Kristensson

- The Effects of Tactile Feedback and Movement Alteration on Interaction and Awareness with Digital Embodiments

Andre Doucette, Regan Mandryk, Carl Gutwin, Miguel Nacenta, and Andriy Pavlovych

- Improving two-thumb text entry on touchscreen devices

Antti Oulasvirta, Anna Reichel, Wenbin Li, Yan Zhang, Myroslav Bachynskyi, Keith Vertanen, and Per Ola Kristensson

In addition, Miguel Nacenta and Per Ola Kristensson will have a note published on the performance and ergonomics of multi-touch rotation manipulations.

Note

- Multi-Touch Rotation Gestures: Performance and Ergonomics

Eve Hoggan, John Williamson, Antti Oulasvirta, Miguel Nacenta, Per Ola Kristensson, and Anu Lehtiö

In a collaborative effort, three SACHI group members will present their work in progress, demonstrating an innovative method for improving the estimation of user distance.

Work In Progress

- The Potential of Fusing Computer Vision and Depth Sensing for Accurate Distance Estimation

Jakub Dostal, Per Ola Kristensson, and Aaron Quigley

Engaging with the research community is also central to SACHI’s goals, and CHI will be no exception with SACHI members hosting a special interest group and two separate workshops.

Special Interest Group

- Visions and Visioning in CHI: A CHI 2013 Special Interest Group Meeting

Organisers: Aaron Quigley, Alan Dix, Wendy E. Mackay, Hiroshi Ishii, and Jürgen Steimle

Workshops

- Grand challenges in text entry

Organisers: Per Ola Kristensson, Stephen Brewster, James Clawson, Mark Dunlop, Strathclyde, Leah Findlater, Poika Isokoski, Benoît Martin, Antti Oulasvirta, Keith Vertanen, and Annalu Waller

- Blended Interaction, Envisioning Future Collaborative Interactive Spaces: A CHI 2013 Workshop

Organisers: Hans-Christian Jetter, Raimund Dachselt, Harald Reiterer, Aaron Quigley, David Benyon, and Michael Haller

SACHI will also be well represented in many of these community meetings with five co-authored workshop papers.

Workshop Papers

- Visual Focus-Aware Applications and Services in Multi-Display Environments. In CHI 2013 Workshop on Gaze Interaction in the Post-WIMP World

Jakub Dostal, Per Ola Kristensson, and Aaron Quigley

- Do we need a standard for evaluating text entry methods? In CHI 2013 Workshop on Grand Challenges in Text Entry

Antti Oulasvirta and Per Ola Kristensson

- Combining Touch and Gaze for Distant Selection in a Tabletop Setting. In CHI 2013 Workshop on Gaze Interaction in the Post-WIMP World

Michael Mauderer, Florian Daiber, and Antonio Krüger

- Developing Efficient Text Entry Methods for the Sinhalese Language. In CHI 2013 Workshop on Grand Challenges in Text Entry

Shyam Reyal, Keith Vertanen, and Per Ola Kristensson

- Authorship in Art/Science Collaboration is Tricky. In CHI 2013 Workshop on Crafting Interactive Systems

Lindsay MacDonald, David Ledo, Miguel A. Nacenta, John Brosz, and Sheelagh Carpendale

Academic service is important to the research community and SACHI will be giving back at this year’s CHI. Professor Quigley is serving as an associate chair in the Interaction Using Specific Capabilities or Modalities subcommittee, as well as serving as a session chair during the event, and Dr Kristensson serves as an associate chair for the Interaction Techniques and Devices subcommittee. After the event, Professor Quigley will be staying on for the Program Committee meeting of the 2013 ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp 2013). Finally, to assist in the smooth running of this year’s conference, SACHI will also provide two student volunteers, Jakub Dostal and Jason T. Jacques.

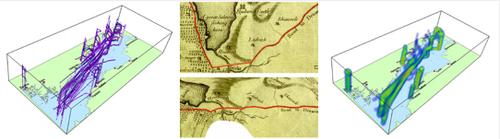

Speaker: Dr Urška Demšar, Centre for GeoInformatics, University of St Andrews

Date/Time: 1-2pm Feb 19, 2013

Location: 1.33a Jack Cole, University of St Andrews

Abstract:

Recent developments and ubiquitous use of global positioning devices have revolutionised movement analysis. Scientists are able to collect increasingly larger movement data sets at increasingly smaller spatial and temporal resolutions. These data consist of trajectories in space and time, represented as time series of measured locations for each moving object. In geoinformatics such data are visualised using various methodologies, e.g. simple 2D spaghetti maps, traditional time-geography space-time cubes (where trajectories are shown as 3D polylines through space and time) and attribute-based linked views. In this talk we present an overview of typical trajectory visualisations and then focus on space-time visual aggregations for one particular application area, movement ecology, which tracks animal movement.

Bio:

Dr Urška Demšar is a lecturer in geoinformatics at the Centre for GeoInformatics (CGI), School of Geography & Geosciences, University of St. Andrews, Scotland, UK. She has a PhD in Geoinformatics from the Royal Institute of Technology (KTH), Stockholm, Sweden and two degrees in Applied Mathematics from the University of Ljubljana, Slovenia. Previously she worked as a lecturer at the National Centre for Geocomputation, National University of Ireland Maynooth, as a researcher at the Geoinformatics Department of the Royal Institute of Technology in Stockholm and as a teaching assistant in Mathematics at the Faculty of Electrical Engineering at the University of Ljubljana. Her primary research interests are in geovisual analytics and geovisualisation. She combines computational and statistical methods with visualisation for knowledge discovery from geospatial data. She is also interested in spatial analysis and mathematical modelling, with one particular application in the analysis of movement data and spatial trajectories.

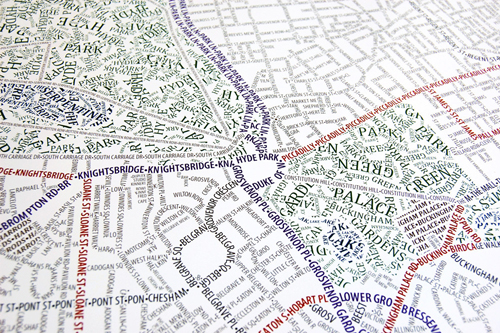

Purposeful Map-Design: What it Means to Be a Cartographer when Everyone is Making Maps

Speaker: David Heyman, Axis Maps

Date/Time: Fri, Feb. 1, 2pm

Location: School III, St Salvator’s Quad, St. Andrews

Abstract:

The democratizing technologies of the web have brought the tools and raw-materials required to make a map to a wider audience than ever before. This proliferation of mapping has redefined modern Cartography beyond the general practice of “making maps” to the purposeful design of maps. Purposeful Cartographic design is more than visuals and aesthetics; there is room for the Cartographer’s design decisions at every step between the initial earthly phenomenon and the end map user’s behavior. This talk will cover the modern mapping workflow from collecting and manipulating data, to combining traditional cartographic design with a contemporary UI/UX, to implementing these maps through code across multiple platforms. I will examine how these design decisions are shaped by the purpose of the map and the desire to use maps to clearly and elegantly present the world.

Bio:

David Heyman is the founder and Managing Director of Axis Maps, a global interactive mapping company formed out of the cartography graduate program of the University of Wisconsin. Established in 2006, the goal of Axis Maps has been to bring the tenets and practices of traditional cartography to the medium of the Internet. Since then, they have designed and built maps for the New York Times, Popular Science, Emirates Airlines, Earth Journalism Network, Duke University and many others. They have also released the freely available indiemapper and ColorBrewer to help map-makers all over the world apply cartographic best-practices to their maps. Recently, their series of handmade typographic maps has been a return to their roots of manual cartographic production. David currently lives in Marlborough, Wiltshire.

We are pleased to announce that Professor Aaron Quigley and Dr. Sara Diamond, the President of the Ontario College of Art and Design University, are the general co-chairs for MobileHCI 2014, the 16th International Conference on Mobile Human-Computer Interaction, in Toronto, Canada. Associate Professor Pourang Irani of the University of Manitoba invited Aaron to join this Canadian organising committee as an international member.

The chairs, Dr. Sara Diamond and Professor Aaron Quigley, have extensive experience organizing and managing academic conferences. Sara Diamond is President of the Ontario College of Art and Design University (OCAD U), Canada’s premier university dedicated to art and design, based in Toronto, the proposed home for MobileHCI 2014. Aaron is the Chair of Human Computer Interaction at the School of Computer Science, University of St. Andrews, Scotland. He directs the St. Andrews Computer Human Interaction Research Group. Building upon a vast repertoire of resources available locally, nationally and internationally, both chairs look forward to delivering a successful and exciting MobileHCI 2014 event in Toronto.

Speaker: Loraine Clarke, University of Strathclyde

Date/Time: 1-2pm Jan 15, 2013

Location: 1.33a Jack Cole, University of St Andrews

Abstract:

Interactive exhibits have become highly expected in traditional museums today. The presence of hands-on exhibits in science centres, along with our familiarity with high quality media experiences in everyday life, has increased our expectations of digital interactive exhibits in museums. Increased accessibility to affordable technology has provided an achievable means to create novel interactive exhibits in museums. However, there is a need to question the value and effectiveness of these interactive exhibits in the museum context. Are these exhibits contributing to the desired attributes of a visitor’s experience, social interactions and visitors’ connection with subject matter, or hindering these factors? The research focuses specifically on multimodal interactive exhibits and the inappropriate or appropriate combination of modalities applied in interactive exhibits relative to subject matter, context and target audience. The research aims to build an understanding of the relationships between different combinations of modalities used in exhibits and museum visitors’ experience, engagement with a topic, social engagement and engagement with the exhibit itself. The talk will present two main projects carried out during the first year of the PhD research. The first project presented will describe the design, development and study of a multimodal painting installation exhibited for 3 months in a children’s cultural centre. The second project presented is an on-going study with the Riverside Transport Museum in Glasgow of six existing multimodal installations in the Transport Museum.

Bio:

Loraine Clarke is a PhD student at the University of Strathclyde. Loraine’s research involves examining interaction with existing museum exhibits that engage visitors in multimodal interaction, developing multimodal exhibits and carrying out field based studies. Loraine’s background is in Industrial Design and Interaction Design through industry and academic experience. Loraine has experience in industry relating to the design and production of kayaking paddles. Loraine has some experience as an interaction designer through projects with a software company. Loraine holds a BDes degree in Industrial Design from the National College of Art and Design, Dublin and an MSc in Interactive Media from the University of Limerick.

Dr Urška Demšar (School of Geography and Geosciences) and Dr Miguel Nacenta (SACHI) are looking for a doctoral student to carry out research in trajectory analysis and interaction. For more information see the position announcement.

For further queries feel free to e-mail Miguel or Urska.

Next year Miguel will join the program committee for the 2nd International Symposium on Pervasive Displays 2013, held in cooperation with ACM / SIGCHI.

“As digital displays become pervasive, they become increasingly relevant in many areas, including advertising, art, sociology, engineering, computer science, interaction design, and entertainment. We invite submissions that report on cutting-edge research in the broad spectrum of pervasive digital displays, from large interactive walls to personal projection, from tablets and mobile phone screens to 3-D displays and tabletops. The symposium on Pervasive Displays welcomes work on all areas pertaining to digital displays”. http://www.pervasivedisplays.org/2013/

Aaron and Per Ola are two of the Associate Chairs for the 15th International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI 2013), which will be held in Munich, Germany, August 27 – 30, 2013.

“MobileHCI is the world’s leading conference in the field of Human Computer Interaction concerned with portable and personal devices and with the services to which they enable access. MobileHCI provides a multidisciplinary forum for academics, hardware and software developers, designers and practitioners to discuss the challenges and potential solutions for effective interaction with and through mobile devices, applications, and services.” http://www.mobilehci2013.org/

Per Ola is also the workshops co-chair for MobileHCI 2013; the 2013 call for workshops is here.

Aaron will be joining the Technical Program Committee of the 2013 ACM International Conference on Pervasive and Ubiquitous Computing (UbiComp 2013).

The UbiComp 2013 Program Chairs are Marc Langheinrich, John Canny, and Jun Rekimoto. They describe UbiComp 2013 as the first merged edition of the two most renowned conferences in the field: Pervasive and UbiComp. While it retains the “UbiComp” short-name in recognition of the visionary work of Mark Weiser, its long name (and focus) reflects the dual history of the new event, i.e., it seeks to publish any work that one would previously expect to find at either UbiComp or Pervasive. The conference will take place from September 8-12 in Zurich, Switzerland. Aaron has previously served on a number of Pervasive and UbiComp Technical Program Committees and looks forward to serving on the TPC of this first joint conference, which is now the premier forum for Ubiquitous and Pervasive Computing research.

http://www.ubicomp.org/