<!–Speaker: Kyle Montague, University of Dundee

Date/Time: 1-2pm May 14, 2013

Location: 1.33a Jack Cole, University of St Andrews–>

Abstract:

Touchscreens are ever-present in technologies today. The large featureless sensors are rapidly replacing the physical keys and buttons on a wide array of digital technologies, the most common being the mobile device. Gaining popularity across all demographics and endorsed for their superior flexibility in soft interface design and their rich gestural interactions, touchscreens currently play a pivotal role in digital technologies. However, just as touchscreens have enabled many to engage with digital technologies, their barriers to access are excluding many others with visual and motor impairments. Contemporary techniques for addressing these accessibility issues fail to consider the variable nature of abilities between people and the ever-changing characteristics of an individual’s impairment. User models for personalisation are often constructed from stereotypical generalisations about people with disabilities, neglecting the unique characteristics of the individuals themselves. Existing strategies for measuring abilities and performance require users to complete exhaustive training exercises that disrupt the intended interactions and yield descriptions of a user’s performance only for that particular instance.

This research aimed to develop novel techniques to support the continuous measurement of individual users’ needs and abilities through natural touchscreen device interactions. The goal was to create detailed interaction models for individual users in order to understand the short- and long-term variances in their abilities and characteristics, resulting in the development of interface adaptations that better support the interaction needs of people with visual and motor impairments.

Bio:

Kyle Montague is a PhD student based within the School of Computing at the University of Dundee. Kyle works as part of the Social Inclusion through the Digital Economy (SiDE) research hub. He is investigating the application of shared user models and adaptive interfaces to improve the accessibility of digital touchscreen technologies for people with vision and motor impairments.

His doctoral studies explore novel methods of collecting and aggregating user interaction data from multiple applications and devices, creating domain-independent user models to better inform adaptive systems of individuals’ needs and abilities.

Alongside his research, Kyle works for iGiveADamn, a small digital design company he set up with fellow graduate Jamie Shek. Past projects have included the iGiveADamn Connect platform for charities, Scottish Universities Sports and the Blood Donation mobile apps. Prior to this he completed an undergraduate degree in Applied Computing at the University of Dundee.


<!–Speaker: Patrick Olivier, Culture Lab, Newcastle University

Date/Time: 1-2pm May 7, 2013

Location: 1.33a Jack Cole, University of St. Andrews–>

Abstract:

The purpose of this talk will be to introduce Culture Lab’s past and current interaction design research into digital tabletops. The talk will span our interaction techniques and technologies research (including pen-based interaction, authentication and actuated tangibles) but also application domains (education, play therapy and creative practice) by reference to four Culture Lab tabletop studies: (1) Digital Mysteries (Ahmed Kharrufa’s classroom-based higher order thinking skills application); (2) Waves (Jon Hook’s expressive performance environment for VJs); (3) Magic Land (Olga Pykhtina’s tabletop play therapy tool); and (4) StoryCrate (Tom Bartindale’s collaborative TV production tool). I’ll focus on a number of specific challenges for digital tabletop research, including selection of appropriate design approaches, the role and character of evaluation, the importance of appropriate “in the wild” settings, and avoiding the trap of simple remediation when working in multidisciplinary teams.

Bio:

Patrick Olivier is a Professor of Human-Computer Interaction in the School of Computing Science at Newcastle University. He leads the Digital Interaction Group in Culture Lab, Newcastle’s centre for interdisciplinary practice-based research in digital technologies. The group’s main interest is interaction design for everyday life settings. Patrick is particularly interested in the application of pervasive computing to education, creative practice, and health and wellbeing, as well as the development of new technologies for interaction (such as novel sensing platforms and interaction techniques).

This year from across SICSA we have at least 16 notes, papers and TOCHI papers being presented at CHI in Paris, along with numerous WIPs, workshop papers, SIGs etc. On April 23rd we hosted 35+ people from across SICSA for a Pre-CHI day, which gave all presenters a final dry run of their talks with feedback. It was also an opportunity for presenters to inform others across SICSA about their work, and for everyone to get a snapshot of HCI research in Scotland.

Pre-CHI Day – April 23, 2013: 10am – 4:30pm – Location: Medical and Biological Sciences Building, Seminar Room 1

9:30 – 10:00 Coffee/Tea

10:00 – 10:25 Memorability of Pre-designed and User-defined Gesture Sets M. Nacenta, Y. Kamber, Y. Qiang, P.O. Kristensson (Univ. of St Andrews, UK) Paper

10:25 – 10:50 Supporting Personal Narrative for Children with Complex Communication Needs R. Black (Univ. of Dundee, UK), A. Waller (Univ. of Dundee, UK), R. Turner (Data2Text, UK), E. Reiter (Univ. of Aberdeen, UK) TOCHI paper

10:50 – 11:05 Coffee Break

11:05 – 11:30 Use of an Agile Bridge in the Development of Assistive Technology S. Prior (Univ. of Abertay Dundee, UK), A. Waller (Univ. of Dundee, UK), T. Kroll (Univ. of Dundee, UK), R. Black (Univ. of Dundee, UK) Paper

11:30 – 11:45 Multiple Notification Modalities and Older Users D. Warnock, S. Brewster, M. McGee-Lennon (Univ. of Glasgow, UK) Note

11:45 – 11:55 Visual Focus-Aware Applications and Services in Multi-Display Environments J. Dostal, P.O. Kristensson, A. Quigley (Univ. of St Andrews) Workshop paper (Workshop on Gaze Interaction in the Post-WIMP)

12:00 – 1:00 Lunch Break & Poster Presentations

1:00 – 1:25 ‘Digital Motherhood’: How does technology help new mothers? L. Gibson and V. Hanson (Univ. of Dundee, UK) Paper

1:25 – 1:40 Combining Touch and Gaze for Distant Selection in a Tabletop Setting M. Mauderer (Univ. of St Andrews), F. Daiber (German Research Centre for Artificial Intelligence – DFKI), A. Krüger (DFKI) Workshop paper (Workshop on Gaze Interaction in the Post-WIMP)

1:40 – 2:05 Focused and Casual Interactions: Allowing Users to Vary Their Level of Engagement H. Pohl (Univ. of Hanover, DE) and R. Murray-Smith (Univ. of Glasgow, UK) Paper

2:05 – 2:20 Seizure Frequency Analysis Mobile Application: The Participatory Design of an Interface with and for Caregivers Heather R. Ellis (Univ. of Dundee) Student Research Competition

2:20 – 2:40 Coffee Break

2:40 – 3:05 Exploring & Designing Tools to Enhance Falls Rehabilitation in the Home S. Uzor and L. Baillie (Glasgow Caledonian Univ., UK) Paper

3:05 – 3:30 Understanding Exergame Users’ Physical Activity, Motivation and Behavior Over Time A. Macvean and J. Robertson (Heriot-Watt Univ., UK) Paper

3:30 – 3:45 Developing Efficient Text Entry Methods for the Sinhalese Language S. Reyal (Univ. of St Andrews), K. Vertanen (Montana Tech), P.O. Kristensson (Univ. of St Andrews) Workshop paper (Grand Challenges in Text Entry)

3:45 – 4:10 The Emotional Wellbeing of Researchers: Considerations for Practice W. Moncur (Univ. of Dundee, UK) Paper

4:10 – 4:30 Closing remarks and (optional) pub outing.

<!–Speaker: Maria Wolters, University of Edinburgh

Date/Time: 1-2pm April 2, 2013

Location: 1.33a Jack Cole, University of St Andrews–>

Abstract:

In this talk, I will give an overview of recent work on reminding and remembering that I have been involved in. I will argue two main points.

– Reminding in telehealthcare is not about putting an intervention in place that enforces 100% adherence to the protocol set for the patient by their wise clinicians. Instead, we need to work with users to select cues that will help them remember and that are solidly anchored in their conceptualisation of their own health and abilities, their life, and their home.

– When tracking a person’s mental health, the stigma of being monitored can outweigh the benefits of monitoring. We don’t remember everything perfectly – if we did, that would be pathological. But this is a problem when we’re asked to report our own feelings, activity levels, sleeping patterns, etc. over a period of several days or weeks, which is important for identifying mental health problems. Is intensive monitoring the solution? Only if it is unobtrusive and non-stigmatising.

I will conclude with a short discussion of the EU project Forget-IT that started in February 2013 and looks at contextualised remembering and intelligent preservation of individual data (such as a record of trips a person made or photos they’ve taken) and organisational data (such as web sites).

Bio:

Maria Wolters is a Research Fellow at the University of Edinburgh who works on the cognitive and perceptual foundations of human-computer interaction. She specialises in dialogue and auditory interfaces. Her main application areas are eHealth, telehealthcare, and personal digital archiving. Maria is the scientific coordinator of the EU FP7 STREP Help4Mood, which supports the treatment of people with depression in the community, and is a researcher on the EU FP7 IP Forget-IT, which looks at sustainable digital archiving. She used to work on the EPSRC-funded MultiMemoHome project, which finished in February

On July 8th, Rónan McAteer from the Watson Solutions Development Software Group in IBM Ireland will give a talk as part of the Big Data Information Visualisation Summer School here in St Andrews. This talk is entitled “Cognitive Computing: Watson’s path from Jeopardy to real-world Big (and dirty) Data.”

While this talk is part of the summer school, we are trying to host it in a venue in central St Andrews during the evening of July 8th so that people from across St Andrews and SICSA can attend if they wish.

The abstract for Rónan’s talk is below:

Building on the success of the Jeopardy Challenge in 2011, IBM is now preparing Watson for use in commercial applications. At first glance, the original challenge appears to present an open-domain question answering problem. However, moving from the regular, grammatical, well-formed nature of gameshow questions to the malformed and error-strewn data that exists in the real world is very much a new and complex challenge for IBM. Unstructured and noisy data in the form of natural language text, coming from sources like instant messaging and recorded conversations, automatically digitised text (OCR), and human shorthand notes (replete with their individual sets of prose and typos), must all be processed in a matter of seconds to find the proverbial ‘needle in the haystack’.

In this talk we’ll look at how Watson is rising to meet these new challenges. We’ll go under the hood to see how the system has changed since the days of Jeopardy, using state-of-the-art IBM hardware and software to significantly reduce the cost while increasing the capability.

<!–Speaker: Jakub Dostal, SACHI, School of Computer Science, University of St Andrews

Date/Time: 1-2pm March 5, 2013

Location: 1.33a Jack Cole, University of St Andrews–>

Abstract:

Modern computer workstation setups regularly include multiple displays in various configurations. With such multi-monitor or multi-display setups we have reached a stage where we have more display real estate available than we are able to comfortably attend to. This talk will present the results of an exploration of techniques for visualising display changes in multi-display environments. Apart from four subtle gaze-dependent techniques for visualising change on unattended displays, it will cover the technology used to enable quick and cost-effective deployment to workstations. An evaluation of both the technology and the techniques themselves will also be presented. The talk will conclude with a brief discussion of the challenges in evaluating subtle interaction techniques.

About Jakub:

Jakub’s bio on the SACHI website.

<!–Speaker: Dr Urška Demšar, Centre for GeoInformatics, University of St Andrews

Date/Time: 1-2pm Feb 19, 2013

Location: 1.33a Jack Cole, University of St Andrews–>

Abstract:

Recent developments in, and the ubiquitous use of, global positioning devices have revolutionised movement analysis. Scientists are able to collect increasingly large movement data sets at increasingly fine spatial and temporal resolutions. These data consist of trajectories in space and time, represented as time series of measured locations for each moving object. In geoinformatics such data are visualised using various methodologies, e.g. simple 2D spaghetti maps, traditional time-geography space-time cubes (where trajectories are shown as 3D polylines through space and time) and attribute-based linked views. In this talk we present an overview of typical trajectory visualisations and then focus on space-time visual aggregations for one particular application area, movement ecology, which tracks animal movement.

Bio:

Dr Urška Demšar is a lecturer in geoinformatics at the Centre for GeoInformatics (CGI), School of Geography & Geosciences, University of St. Andrews, Scotland, UK. She has a PhD in Geoinformatics from the Royal Institute of Technology (KTH), Stockholm, Sweden and two degrees in Applied Mathematics from the University of Ljubljana, Slovenia. Previously she worked as a lecturer at the National Centre for Geocomputation, National University of Ireland Maynooth, as a researcher at the Geoinformatics Department of the Royal Institute of Technology in Stockholm and as a teaching assistant in Mathematics at the Faculty of Electrical Engineering at the University of Ljubljana. Her primary research interests are in geovisual analytics and geovisualisation. She combines computational and statistical methods with visualisation for knowledge discovery from geospatial data. She is also interested in spatial analysis and mathematical modelling, with one particular application in the analysis of movement data and spatial trajectories.

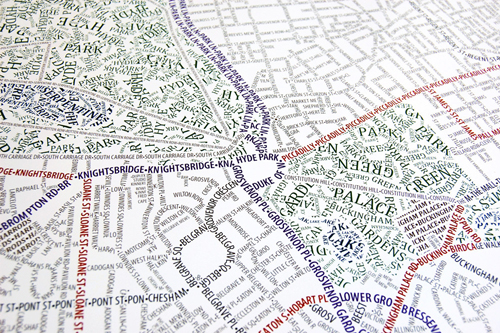

Purposeful Map-Design: What it Means to Be a Cartographer when Everyone is Making Maps

<!–Speaker: David Heyman, Axis Maps

Date/Time: Fri, Feb. 1, 2pm

Location: School III, St Salvator’s Quad, St. Andrews–>

Abstract:

The democratizing technologies of the web have brought the tools and raw materials required to make a map to a wider audience than ever before. This proliferation of mapping has redefined modern Cartography beyond the general practice of “making maps” to the purposeful design of maps. Purposeful Cartographic design is more than visuals and aesthetics; there is room for the Cartographer’s design decisions at every step between the initial earthly phenomenon and the end map user’s behavior. This talk will cover the modern mapping workflow, from collecting and manipulating data, to combining traditional cartographic design with a contemporary UI/UX, to implementing these maps through code across multiple platforms. I will examine how these design decisions are shaped by the purpose of the map and the desire to use maps to clearly and elegantly present the world.

Bio:

David Heyman is the founder and Managing Director of Axis Maps, a global interactive mapping company formed out of the cartography graduate program of the University of Wisconsin. Established in 2006, Axis Maps has aimed to bring the tenets and practices of traditional cartography to the medium of the Internet. Since then, they have designed and built maps for the New York Times, Popular Science, Emirates Airlines, Earth Journalism Network, Duke University and many others. They have also released the freely available indiemapper and ColorBrewer to help map-makers all over the world apply cartographic best practices to their maps. Recently, their series of handmade typographic maps has been a return to their roots of manual cartographic production. David currently lives in Marlborough, Wiltshire.

<!–Speaker: Loraine Clarke, University of Strathclyde

Date/Time: 1-2pm Jan 15, 2013

Location: 1.33a Jack Cole, University of St Andrews–>

Abstract:

Interactive exhibits are now widely expected in traditional museums. The presence of hands-on exhibits in science centres, along with our familiarity with high-quality media experiences in everyday life, has increased our expectations of digital interactive exhibits in museums. Increased access to affordable technology has provided an achievable means of creating novel interactive exhibits in museums. However, there is a need to question the value and effectiveness of these interactive exhibits in the museum context. Are these exhibits contributing to the desired attributes of a visitor’s experience, social interactions and visitors’ connection with subject matter, or hindering these factors? The research focuses specifically on multimodal interactive exhibits and the appropriate or inappropriate combination of modalities applied in interactive exhibits relative to subject matter, context and target audience. The research aims to build an understanding of the relationships between different combinations of modalities used in exhibits and museum visitors’ experience, engagement with a topic, social engagement and engagement with the exhibit itself. The talk will present two main projects carried out during the first year of the PhD research. The first project is the design, development and study of a multimodal painting installation exhibited for 3 months in a children’s cultural centre. The second is an ongoing study, with the Riverside Transport Museum in Glasgow, of six existing multimodal installations in the museum.

Bio:

Loraine Clarke is a PhD student at the University of Strathclyde. Her research involves examining interaction with existing museum exhibits that engage visitors in multimodal interaction, developing multimodal exhibits and carrying out field-based studies. Loraine’s background is in Industrial Design and Interaction Design, through both industry and academic experience. Her industry experience relates to the design and production of kayaking paddles, and she has some experience as an interaction designer through projects with a software company. Loraine holds a BDes degree in Industrial Design from the National College of Art and Design, Dublin, and an MSc in Interactive Media from the University of Limerick.

<!–Speaker: John McCaffery, University of St. Andrews

Date/Time: 2-3pm Dec 12, 2012

Location: 1.33a Jack Cole, University of St Andrews –>

Abstract:

Open Virtual Worlds are a platform with several advantages. They provide an out-of-the-box mechanism for content creation, distributed access and programming. They are open source, so they can be modified as necessary. There is also a large amount of content that has already been created within Virtual Worlds. As such, they provide an interesting opportunity for HCI experimentation. When experimenting with novel modes of interaction, prototypes can be created within a Virtual World relatively easily. Once a prototype has been created, users can be put into use-case scenarios based around existing content. Alternatively, custom environments with very constrained parameters can quickly be created for controlled experimentation.

This talk will cover some of the interaction modes currently being experimented with by the OpenVirtualWorlds group.

Bio:

John McCaffery is a PhD student in the Open Virtual Worlds group. John investigates how the open frameworks for distributing, programming and manipulating 3D data provided by Open Virtual Worlds can be used to provide a model for how the 3D web may develop. Open Virtual Worlds is a general term for open source, open protocol client/server architectures for streaming and modifying 3D data. Examples include the SecondLife viewer and its derivatives and the SecondLife and OpenSim server platforms. John’s work includes investigating how the programming possibilities of Virtual Worlds can be extended and how Virtual World access can be modified to provide new experiences and new experimental possibilities, built around existing content. For more information on John’s work, see his research blog.