Last year, our lab (SACHI) at the University of St. Andrews applied for, and was selected to receive, the Project Soli alpha developer kit, along with 60 other groups around the world. Project Soli is a radar-based sensor that can detect subtle micro-motions of human fingers. You can read more about the project here: https://atap.google.com/soli/

Because human fingers are dexterous and highly expressive, and Soli's radar-based sensing is rich, Soli unlocks broad potential for gesture interaction with computers, mobile phones and wearable devices.

When we received the developer kit (thank you, Google!), we set out to explore constrained interaction on smartwatches. Today, using a smartwatch almost always requires two hands, which is troublesome, especially when your other hand is busy or unavailable.

As part of our systematic exploration of Soli, we came upon the idea of "object detection" with Soli, or "serendipity", as Ivan Poupyrev, head of the Soli team, calls it. We discovered that the Soli sensor, with suitable algorithmic inference, can recognize the materials and objects it is touching. In simple terms, different objects reflect the radar signal with different return characteristics because of their material, shape and geometry. We developed a machine learning technique to train on, and then classify, the different objects presented to Soli.
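This post does not describe our technique in detail, so the following is only an illustrative sketch of the general idea of "train, then classify": a simple nearest-centroid classifier in Python. It assumes each radar reading has already been reduced to a fixed-length feature vector; the feature values, object labels and the functions `train_centroids` and `classify` are all hypothetical and are not our actual pipeline or any Soli SDK API.

```python
from math import dist
from statistics import fmean

# Hypothetical training data: each object label maps to a few feature
# vectors (stand-ins for features of the radar return signal).
# These numbers are invented for illustration only.
TRAINING_DATA = {
    "glass": [[0.9, 0.1, 0.3], [0.85, 0.15, 0.35]],
    "wood": [[0.2, 0.7, 0.5], [0.25, 0.65, 0.45]],
    "metal": [[0.95, 0.9, 0.1], [0.9, 0.85, 0.15]],
}


def train_centroids(data):
    """Average each label's feature vectors into a single centroid."""
    return {
        label: [fmean(column) for column in zip(*vectors)]
        for label, vectors in data.items()
    }


def classify(centroids, features):
    """Return the label whose centroid is nearest (Euclidean) to features."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


if __name__ == "__main__":
    centroids = train_centroids(TRAINING_DATA)
    print(classify(centroids, [0.88, 0.12, 0.32]))
```

A nearest-centroid classifier is chosen here only because it is short and easy to follow; a real system would likely use richer features and a stronger classifier.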

Our project was selected and featured in the official alpha developer video released by Google ATAP (see the video above), which was also shown on stage during the ATAP session at Google I/O 2016 on May 20, 2016.

For a quick overview of our work, take a look at the video: ours is the first project shown, on object recognition, and it lasts about 10 seconds. There is more to come on what we did with this, what other types of recognition we were able to achieve, and what this means for HCI, which we hope to publish and share in the coming months.

We know, of course, that there is a considerable body of related work on recognising objects with radar signals. However, we believe this is the first time it has been achieved in real time, at this scale, with consumer-ready devices, and as a new means of enabling advanced interaction.

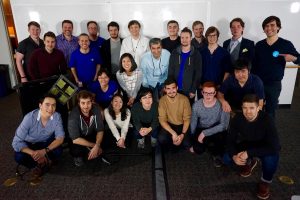

In January 2016, our team was selected as one of seven teams to attend a Soli workshop at Google in March 2016. Google supported the trip, and we greatly appreciate the kindness of the Project Soli team in allowing us to show what we had achieved.

Here are some pictures from the workshop.

We are grateful to everyone involved in the project, and thank the Soli team for their great work and support!

Our team consisted of Hui-Shyong Yeo (a PhD student in SACHI), Patrick Schrempf (a 2nd-year CS student), Gergely Flamich (a 2nd-year CS student), Dr David Harris-Birtill (a senior research fellow in SACHI), and Professor Aaron Quigley.

For more details please contact:

Hui-Shyong Yeo hsy@st-andrews.ac.uk

or

Dr David Harris-Birtill dcchb@st-andrews.ac.uk

Links featuring Soli object recognition:

- Google ATAP Soli Developers
- Engadget: Google controls a smartwatch with radar-powered finger gestures
- SlashGear: See Google’s Soli make Apple Watch look like a relic
- TechCrunch: Google’s ATAP is bringing its Project Soli radar sensor to smartwatches and speakers
- Yahoo Tech: Google’s Soli could give your next smartwatch gesture controls
- TechRadar: Google is bringing better gesture controls to your smartwatch
- HotHardware: Google Project Soli Controls A Smartwatch With Radar-Guided Hand Gestures
- DigitalTrends: Google and LG built a gesture-controlled smartwatch using Project Soli
- PhoneArena: Google demos hands-free smartwatch control with Project Soli radar tech
- AndroidHeadlines: Google’s Project Soli Brings a Tiny Radar to your Smartwatch