Radar and object recognition

The Soli radar, from Google ATAP, was designed to track micro finger motion for enabling gesture interaction with computing devices. First shown at Google I/O ’15, Soli introduces a new sensing technology that uses miniature radar to detect touchless gesture interactions.

2018 – 2019

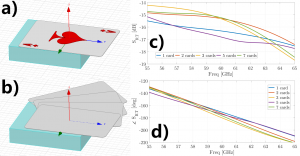

In 2018 we published a paper entitled “Exploring Tangible Interactions with Radar Sensing” in the Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT), Volume 2, Issue 4, December 2018. Research has explored miniature radar as a promising sensing technique for the recognition of gestures, objects, users’ presence and activity. However, within Human-Computer Interaction its use remains underexplored, in particular in Tangible User Interfaces. In our 2018 IMWUT paper we explore two research questions about radar as a platform for sensing tangible interaction, covering the counting, ordering and identification of objects and the tracking of their orientation, movement and distance. We detail the design space and practical use cases for such interaction, which allows us to identify a series of design patterns that go beyond static interaction and are continuous and dynamic. This exploration is grounded in both a characterisation of the radar sensing and our experiments, which show that such sensing is accurate with minimal training. As we demonstrate in this work, the deployment of low-cost miniature radar in day-to-day settings opens up new forms of object and material interaction.

2018/2019 Links

- Hui-Shyong Yeo, Ryosuke Minami, Kirill Rodriguez, George Shaker, and Aaron Quigley. 2018. Exploring Tangible Interactions with Radar Sensing. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2, 4, Article 200 (December 2018), 25 pages. DOI: https://doi.org/10.1145/3287078

- YouTube Link: https://www.youtube.com/watch?v=FCi56T8X-eY

- Dataset for Exploring Tangible Interactions with Radar Sensing: https://github.com/tcboy88/solinteractiondata

2019 Press

Background

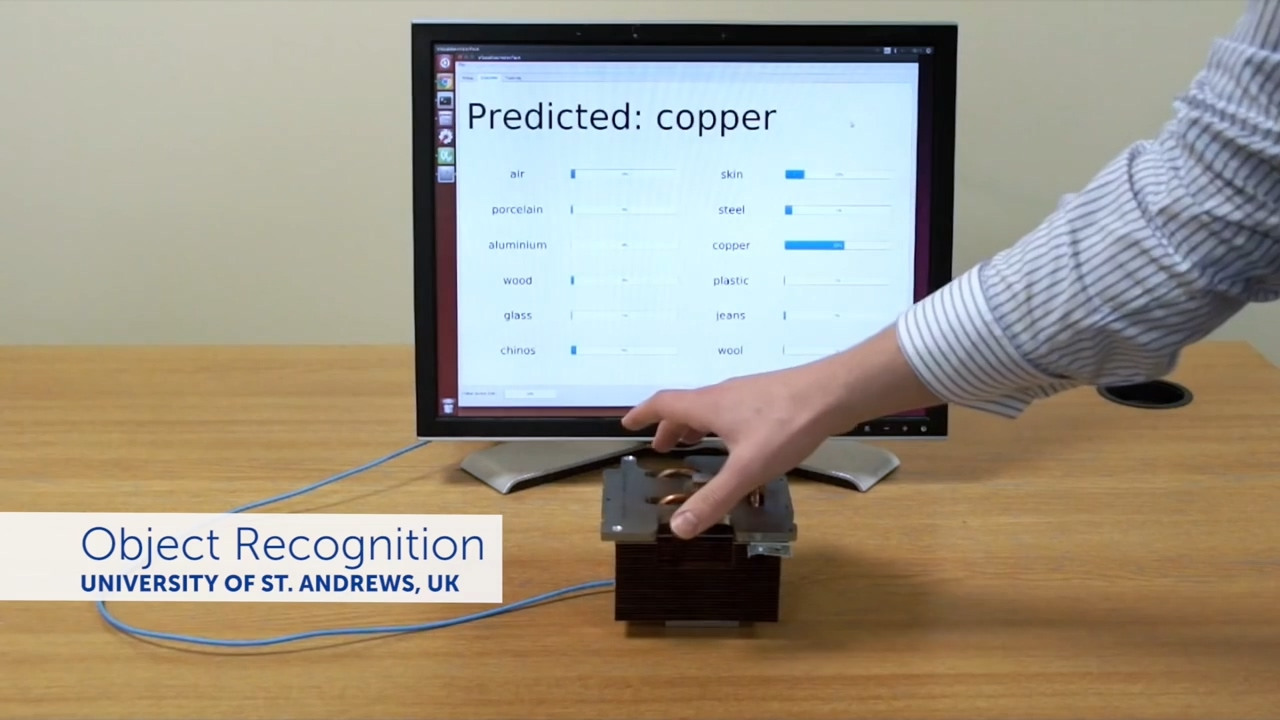

As one of the few sites to receive the Google ATAP Soli AlphaKit in 2015, we have discovered, developed and tested a new use for Soli, namely object and material recognition, in a project we call RadarCat. To achieve this, we use the Soli sensor together with our recognition software to train on and classify different materials and objects, in real time, with very high accuracy. A short snippet of this work was included in the Google ATAP Soli Alpha developers kit video shown at Google I/O ’16.

2016

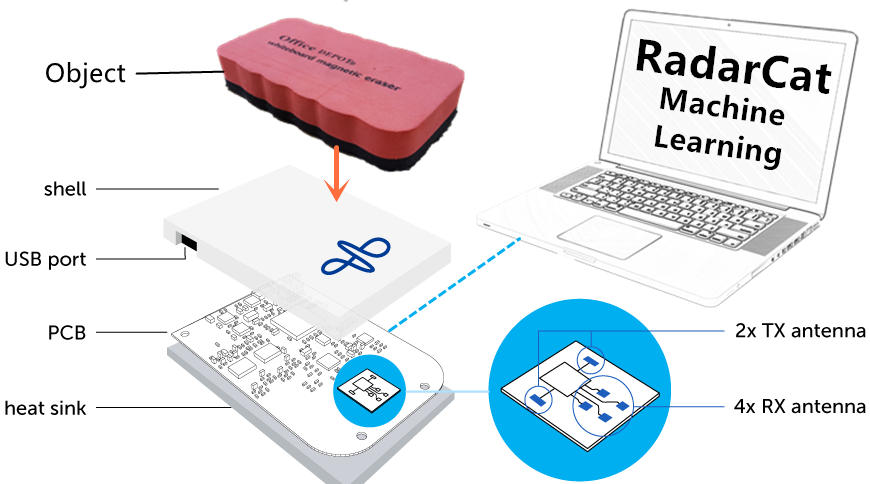

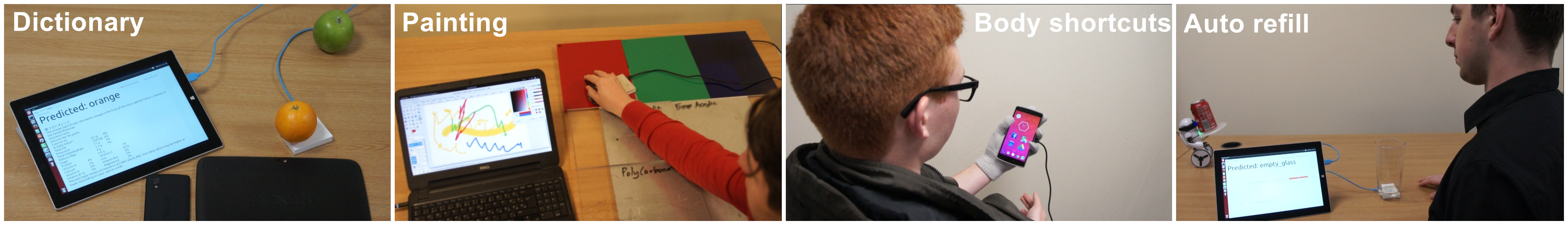

RadarCat (Radar Categorization for Input & Interaction) is a small, versatile radar-based system for material and object classification which enables new forms of everyday proximate interaction with digital devices. In this work we demonstrate that we can train the system on different types of objects and then recognize them in real time. Our studies include everyday objects and materials, transparent materials and different body parts. Our videos demonstrate four working examples based on RadarCat: a physical object dictionary, a painting and photo editing application, body shortcuts and automatic refill.

30 second teaser:

Full video:

Curated video by Futurism, with more than 1 million views!

Talk at UIST 2016:

Beyond human-computer interaction, RadarCat also opens up new opportunities in areas such as navigation and world knowledge (e.g., for low vision users), consumer interaction (e.g., checkout scales), industrial automation (e.g., recycling), or laboratory process control (e.g., traceability).

Our novel sensing approach exploits the multi-channel radar signals, emitted from a Project Soli sensor, that are highly characteristic when reflected from everyday objects.

Our current studies demonstrate that classifying these radar signals with a random forest classifier is robust and accurate.
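To make the idea of random forest classification over multi-channel signals concrete, here is a minimal sketch. It is not the RadarCat implementation and it does not use Soli data: the material names, the per-channel `PROFILES` values, the noise level and every function name are assumptions invented for illustration. The forest is written with the Python standard library only, so that it stays self-contained; in practice a library implementation such as scikit-learn's `RandomForestClassifier` would be the more natural choice.

```python
import random
from collections import Counter

# Hypothetical mean reflection strength per radar channel for three materials.
# These numbers are invented for illustration; they are not Soli measurements.
PROFILES = {
    "glass": [0.2, 0.8, 0.5, 0.1],
    "wood":  [0.5, 0.2, 0.9, 0.4],
    "steel": [0.9, 0.5, 0.1, 0.8],
}

def make_samples(rng, per_class, noise=0.02):
    """Draw noisy synthetic feature vectors, one value per channel."""
    X, y = [], []
    for label, profile in PROFILES.items():
        for _ in range(per_class):
            X.append([rng.gauss(mean, noise) for mean in profile])
            y.append(label)
    return X, y

def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def build_tree(X, y, rng, depth=0, max_depth=4):
    """Grow one tree; each split considers a random subset of channels."""
    majority = Counter(y).most_common(1)[0][0]
    if depth >= max_depth or len(set(y)) == 1:
        return majority  # leaf: a class label
    n_features = len(X[0])
    best = None
    for f in rng.sample(range(n_features), max(1, int(n_features ** 0.5))):
        values = sorted({row[f] for row in X})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2  # midpoint between neighbouring observed values
            left = [i for i, row in enumerate(X) if row[f] <= t]
            right = [i for i, row in enumerate(X) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini([y[i] for i in left])
                     + len(right) * gini([y[i] for i in right])) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t, left, right)
    if best is None:  # no usable split among the sampled channels
        return majority
    _, f, t, left, right = best
    return (f, t,
            build_tree([X[i] for i in left], [y[i] for i in left],
                       rng, depth + 1, max_depth),
            build_tree([X[i] for i in right], [y[i] for i in right],
                       rng, depth + 1, max_depth))

def predict_tree(node, row):
    """Walk from the root to a leaf label."""
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if row[f] <= t else right
    return node

def train_forest(X, y, rng, n_trees=25):
    """Bagging: fit every tree on a bootstrap resample of the training set."""
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx], rng))
    return forest

def predict(forest, row):
    """Majority vote over all trees."""
    votes = Counter(predict_tree(tree, row) for tree in forest)
    return votes.most_common(1)[0][0]

rng = random.Random(0)  # fixed seed so the run is repeatable
X_train, y_train = make_samples(rng, per_class=30)
X_test, y_test = make_samples(rng, per_class=10)
forest = train_forest(X_train, y_train, rng)
correct = sum(predict(forest, row) == label for row, label in zip(X_test, y_test))
accuracy = correct / len(y_test)
print(f"held-out accuracy: {accuracy:.2f}")
```

The synthetic classes here are deliberately well separated, so the score says nothing about real radar data. The sketch only shows the shape of the pipeline: per-channel features go in, each tree is trained on a bootstrap resample with a random subset of channels per split, and the forest returns a label by majority vote.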

2016 Links

- Yeo, H.-S., Flamich, G., Schrempf, P., Harris-Birtill, D., and Quigley, A. (2016) RadarCat: Radar Categorization for Input & Interaction. In Proceedings of the 29th Annual ACM Symposium on User Interface Software and Technology (UIST ’16). ACM, New York, NY, USA. Open Access.

- Our May 2016 blog post on work at Google I/O ’16

- Video shown by ATAP at Google I/O ’16

2016 Press