Proximity/Gaze Awareness

Modern computer setups regularly include multiple displays in various configurations. With such multi-display setups, we have reached a stage where more display real estate is available than we can comfortably attend to. While previous work has demonstrated the benefits of large or multi-display setups, it has also suggested that this increase in display space leads to usability problems, window management difficulties and information overload. In particular, once the total display area no longer fits within the user’s field of vision, the user cannot attend to all of it at once and has to turn their head substantially to see different parts of the display environment.

In addition, increasing screen real estate allows for the development of applications in which a single user manages a large amount of data and related tasks through a distributed user interface. However, such users can easily become overloaded and miss display changes as they shift their attention between displays. We can also exploit people’s movements and their distance to the display and to collaborators. Proxemic interaction can potentially support such scenarios in a fluid and seamless way, supporting both tightly coupled collaboration and parallel exploration.

Our research in SACHI on proximity- and gaze-aware interfaces has tackled these questions by developing toolkits, new algorithms, new forms of interaction and new means of feedback, including subtle feedback, as described in the publications below. For example, Diff Displays helps users of single or multiple displays (e.g. monitors) to quickly gauge what has changed since they last looked at a display. Displays that are in use but not currently being looked at are called “unattended displays”.
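The idea of an unattended display can be illustrated with a minimal sketch. This is not the Diff Displays implementation; it is a hypothetical model that assumes some external tracker reports which display the user is looking at, and all names (`Display`, `ChangeTracker`, `gaze_moved_to`) are invented for illustration. It simply queues changes that occur on displays the user is not looking at and returns them when the user's gaze comes back.

```python
from dataclasses import dataclass, field


@dataclass
class Display:
    """One monitor in a multi-display setup (hypothetical model)."""
    name: str
    attended: bool = False
    unattended_since: float = 0.0  # time the user last looked away
    missed: list = field(default_factory=list)  # descriptions of missed changes


class ChangeTracker:
    """Queues changes on unattended displays until the user looks back."""

    def __init__(self, names):
        self.displays = {n: Display(n) for n in names}

    def record_change(self, name, description):
        display = self.displays[name]
        # Changes on the attended display are assumed to be seen directly.
        if not display.attended:
            display.missed.append(description)

    def gaze_moved_to(self, name, timestamp):
        """Mark `name` as attended and return the changes missed on it."""
        for display in self.displays.values():
            if display.attended and display.name != name:
                display.attended = False
                display.unattended_since = timestamp
        target = self.displays[name]
        missed = list(target.missed)
        target.missed.clear()
        target.attended = True
        return missed


if __name__ == "__main__":
    tracker = ChangeTracker(["left", "right"])
    tracker.gaze_moved_to("left", 0.0)
    tracker.record_change("right", "new email")
    print(tracker.gaze_moved_to("right", 3.0))  # prints ['new email']
```

In a real system the returned changes would drive some form of visual feedback on the display rather than being printed.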

Publications

Sebastian Boring, Saul Greenberg, Jo Vermeulen, Jakub Dostal, Nicolai Marquardt. The Dark Patterns of Proxemic Sensing. In IEEE Computer, Volume 47, Issue 8, August 2014, pp. 56-60, doi:10.1109/MC.2014.223.

Saul Greenberg, Sebastian Boring, Jo Vermeulen, Jakub Dostal. Dark Patterns in Proxemic Interactions: A Critical Perspective. In Proceedings of the 10th ACM Conference on Designing Interactive Systems (DIS 2014), ACM Press, 2014. [Best Paper Award]

Garrido, J, Penichet, V, Lozano, M, Quigley, AJ & Kristensson, PO 2014, ‘AwToolkit: attention-aware user interface widgets’. in Proceedings of the 12th ACM International Working Conference on Advanced Visual Interfaces (AVI 2014). ACM Press – Association for Computing Machinery. [Best Paper Award]

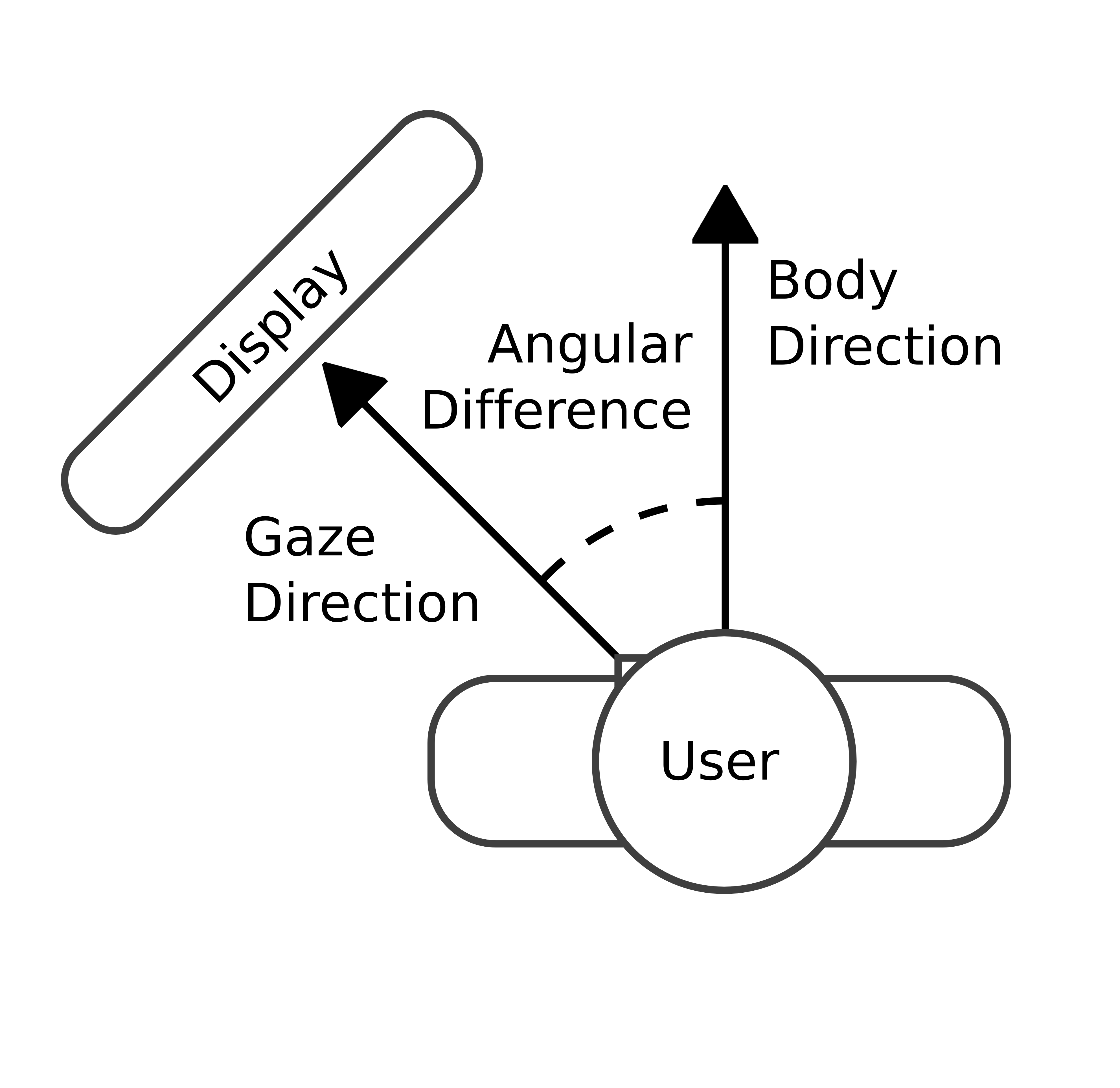

Dostal, J, Kristensson, PO & Quigley, AJ 2014, ‘Estimating and using absolute and relative viewing distance in interactive systems’. Pervasive and Mobile Computing, vol. 10, Part B, pp. 173-186.

Dostal, J, Hinrichs, U, Kristensson, PO & Quigley, AJ 2014, ‘SpiderEyes: designing attention- and proximity-aware collaborative interfaces for wall-sized displays’. in Proceedings of the 19th ACM International Conference on Intelligent User Interfaces (IUI 2014). ACM Press – Association for Computing Machinery, pp. 143-152.

Dostal, J, Kristensson, PO & Quigley, AJ 2013, ‘Multi-view proxemics: distance and position sensitive interaction’. in Proceedings of the 2nd ACM International Symposium on Pervasive Displays (PerDis ’13), Mountain View, United States, 4-5 June. ACM Press – Association for Computing Machinery, New York, pp. 1-6.

Dostal, J, Kristensson, PO & Quigley, AJ 2013, ‘The potential of fusing computer vision and depth sensing for accurate distance estimation’. in Extended Abstracts of the 31st ACM Conference on Human Factors in Computing Systems (CHI 2013). ACM Press – Association for Computing Machinery, pp. 1257-1262.

Dostal, J, Kristensson, PO & Quigley, AJ 2013, ‘Subtle gaze-dependent techniques for visualising display changes in multi-display environments’. in Proceedings of the 18th ACM International Conference on Intelligent User Interfaces (IUI 2013). ACM Press – Association for Computing Machinery.

Jakub Dostal, Per Ola Kristensson and Aaron Quigley. Visual Focus-Aware Applications and Services in Multi-Display Environments. In CHI ’13 Workshop on Gaze Interaction in the Post-WIMP World, 2013.

Per Ola Kristensson, Jakub Dostal and Aaron Quigley. Designing Mobile Computer Vision Applications for the Wild: Implications on Design and Intelligibility. In Pervasive Intelligibility: the Second Workshop on Intelligibility and Control in Pervasive Computing, 2012.

Jakub Dostal and Aaron Quigley. There Is More to Multimodal Interfaces than Speech, Vibration and Position: State of the Art in Multimodal Interfaces for People with Disabilities. In Proceedings of the 2010 Irish Human Computer Interaction Conference, 2010.