An interactive session for Doors Open 2025, hosted by SACHI & the IDEA Network

On 1 May 2025, we hosted a small but energised session in the Jack Cole building as part of the School of Computer Science’s Doors Open programme. Our aim? To invite people into a conversation we believe needs to be more public, more grounded, and more collaborative: how can universities and communities work together to shape a more ethical digital future?

This session, Co-Designing Ethical Digital Futures: The University–Community Gateway, was co-organised by Abd Alsattar Ardati, researchers from SACHI (our Human-Computer Interaction group here at St Andrews), and the IDEA Network, a cross-university initiative working on community-led research and open knowledge.

Together, we welcomed participants into a relaxed and interactive 45-minute workshop built around one simple premise: the technologies shaping our lives should reflect the values, needs, and voices of the people most impacted by them.

Why we did this

Digital transformation isn’t just about tools and platforms; it’s about power, participation, and who gets to shape the future. With Scotland’s Ethical Digital Nation vision now in motion, and research across our university increasingly engaged with questions of inclusion, AI fairness, and civic trust, we felt this was the right time to bring these discussions into the open, with communities at the centre.

We wanted a session where people could take an active part and try out a powerful design process for themselves. Specifically, the session focused on co-design, a method grounded in listening, participation, and collective imagination. It’s about designing with, not for.

What we did

We started with a short talk about the evolving role of universities in society, from traditional centres of learning to more civic, collaborative institutions. We shared a few examples of how SACHI and the IDEA Network are exploring questions around digital accessibility, inclusion, and how communities can help shape the systems they interact with every day.

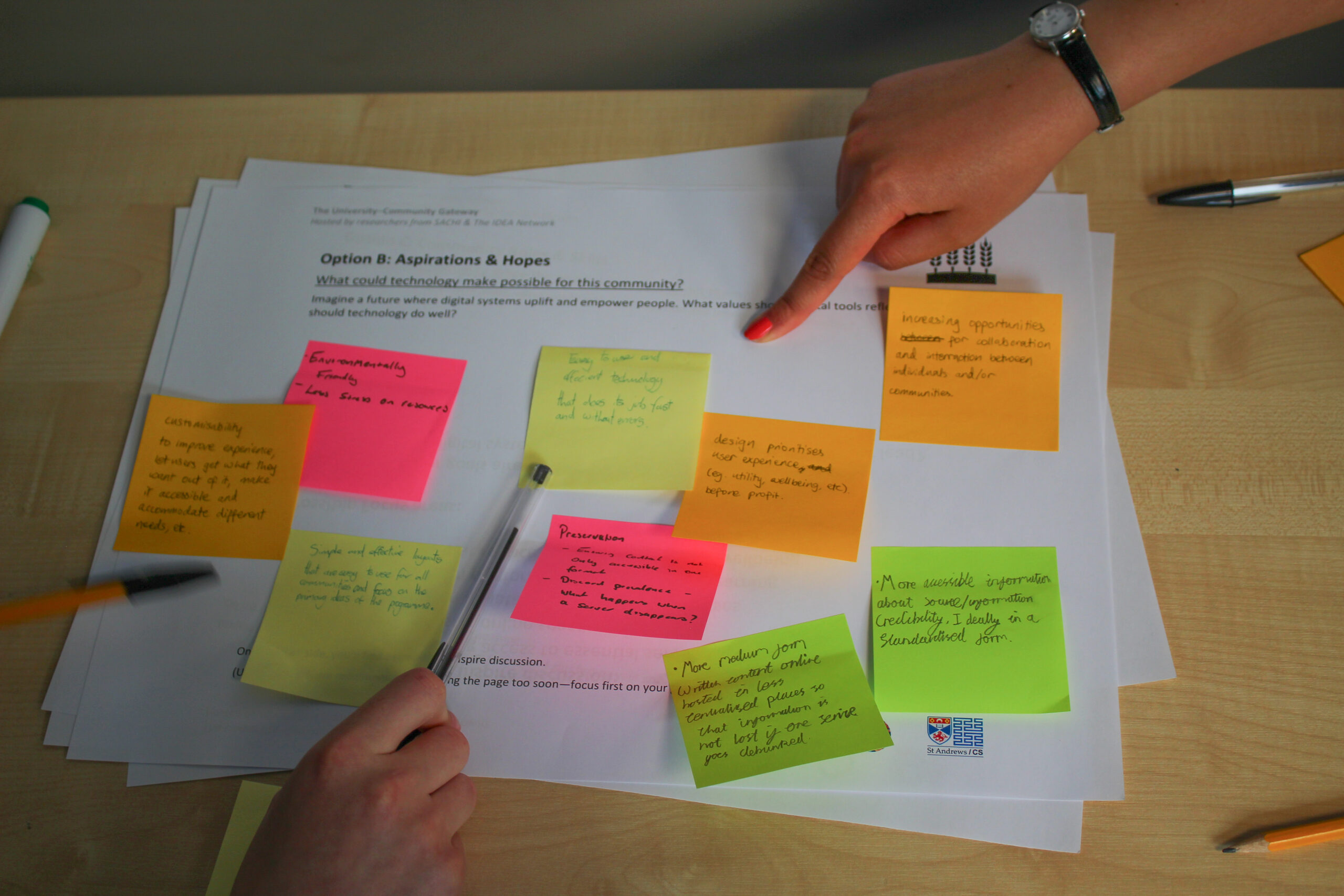

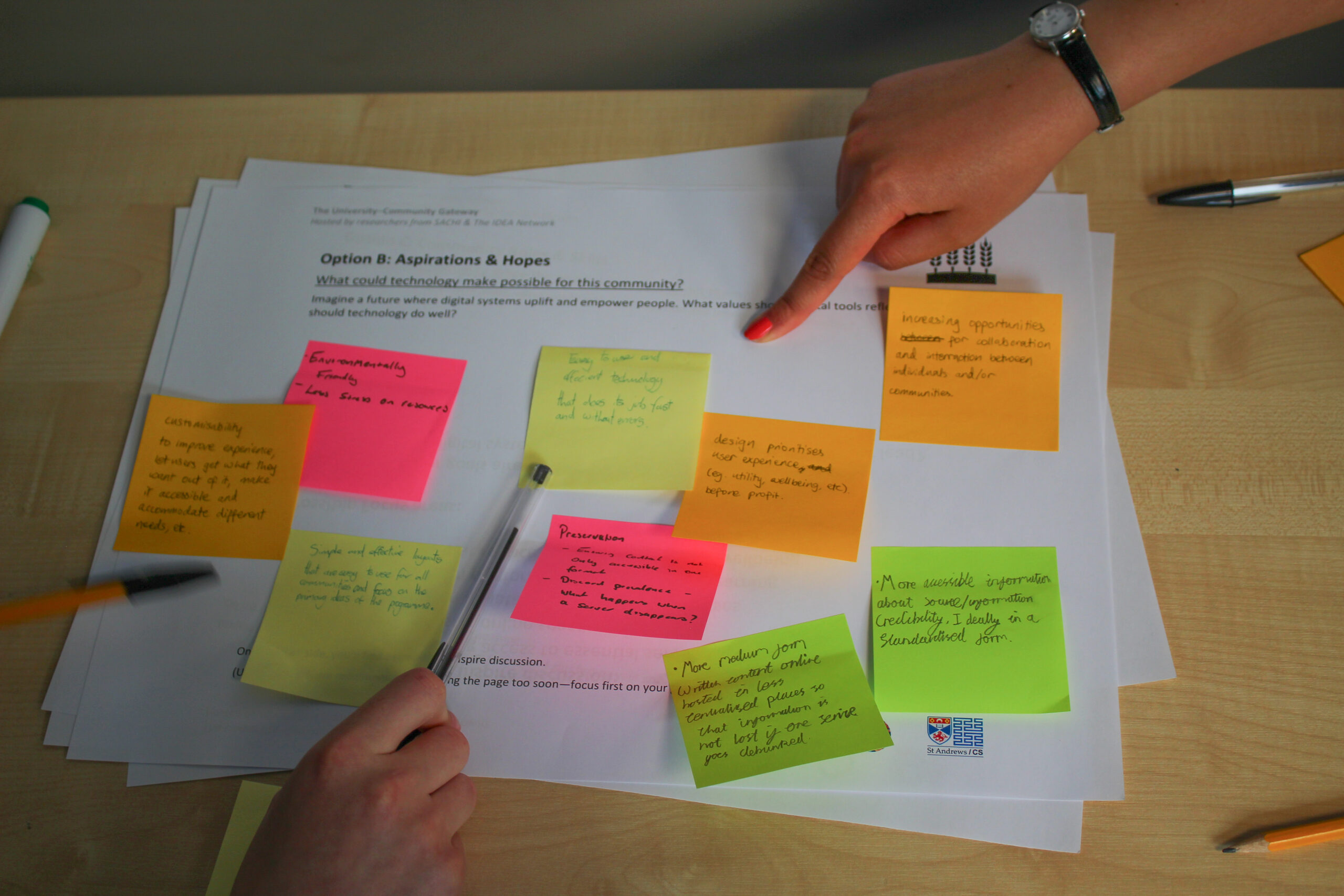

Then we moved into group activities. Participants chose a community they cared about: young people, people in later life, rural communities, disabled people, newcomers to the UK, and more. Within those groups, they explored one of four themes:

Each table had a simple prompt card, coloured markers, and a big piece of paper to map their ideas. No pressure to produce perfect solutions; just space to think together, share perspectives, and imagine something better.

What came up

What struck us most was how quickly the room filled with energy, honesty, and curiosity. People named real, lived issues: confusing online services, lack of representation in digital design, barriers to accessing support, or simply not feeling heard by institutions.

But they also shared hope about what digital systems could do if they were designed differently. From inclusive education tools to community-owned tech platforms, the notes and reflections at the end captured a mix of practical insight and ambitious imagination.

We’re still going through the ideas shared (some of which we hope to write up more formally), but one thing is clear: when you invite people in, not just to speak, but to shape, you get a richer, more grounded vision of what ethical tech could be.

Where next?

This session is part of a wider effort across SACHI and the IDEA Network to open up conversations about technology, power, and participation, and to make co-design a routine part of how we do research and innovation.

We’re grateful to everyone who showed up, listened, spoke, drew, and reflected with us. And especially to the SACHI volunteers who helped make it happen with such care and generosity.

If you’re curious about this work, want to get involved, or just want to keep in touch, we’d love to hear from you.

IDEA Network: ideanetwork@st-andrews.ac.uk

SACHI: sachi@st-andrews.ac.uk

Let’s keep making spaces for public imagination, ethical questions, and community-led futures.